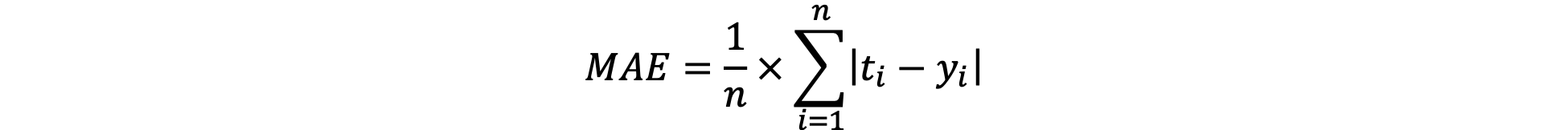

Definition: The average absolute difference between the target (actual) values and the model's predicted values.

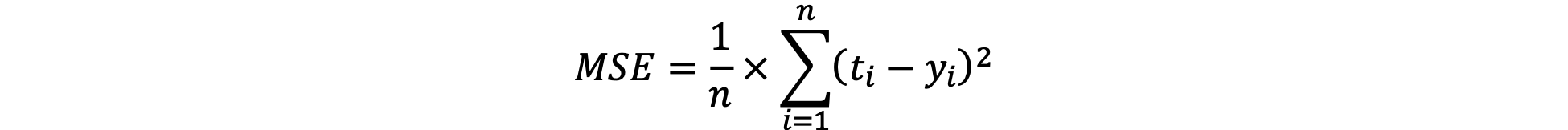

Definition: The average squared difference between the target (actual) values and the model's predicted values.

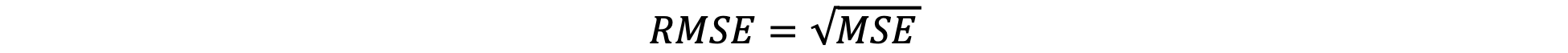

Definition: The square root of the MSE, providing a more interpretable scale (same unit as the target variable).
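
The three error metrics above can be sketched in a few lines of Python. This is a minimal illustration using the standard textbook formulas, not a reproduction of the formulas in the images; the function names and sample values are made up for the example.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error: average of |actual - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error: average of (actual - predicted)^2."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error: sqrt(MSE), in the same unit as the target."""
    return math.sqrt(mse(y_true, y_pred))

# Illustrative data (hypothetical): residuals are 1, 0, -2, -1
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

print(mae(y_true, y_pred))   # 1.0
print(mse(y_true, y_pred))   # 1.5
print(rmse(y_true, y_pred))  # sqrt(1.5), roughly 1.22
```

Because MSE squares each residual, the single error of 2 contributes more to MSE and RMSE than it does to MAE, which is why RMSE exceeds MAE here.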

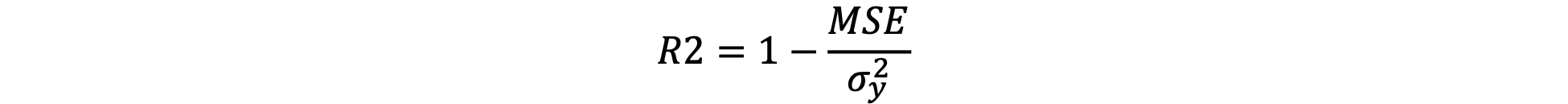

Definition: The proportion of the variance in the dependent variable that is predictable from the independent variable(s).
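
As a rough sketch, this quantity is commonly computed as one minus the ratio of the residual sum of squares to the total sum of squares. The code below assumes that standard formulation; the function name `r_squared` and the sample data are illustrative only.

```python
def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    # Residual sum of squares: variation left unexplained by the model
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: variation of the actual values around their mean
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all actual values are identical")
    return 1.0 - ss_res / ss_tot

# Illustrative data (hypothetical)
y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]

print(r_squared(y_true, y_pred))  # 1 - 6 / 14.75, roughly 0.59
print(r_squared(y_true, y_true))  # 1.0 for a perfect fit
```

Under this formulation the value can be negative when the model fits worse than simply predicting the mean of the actual values.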

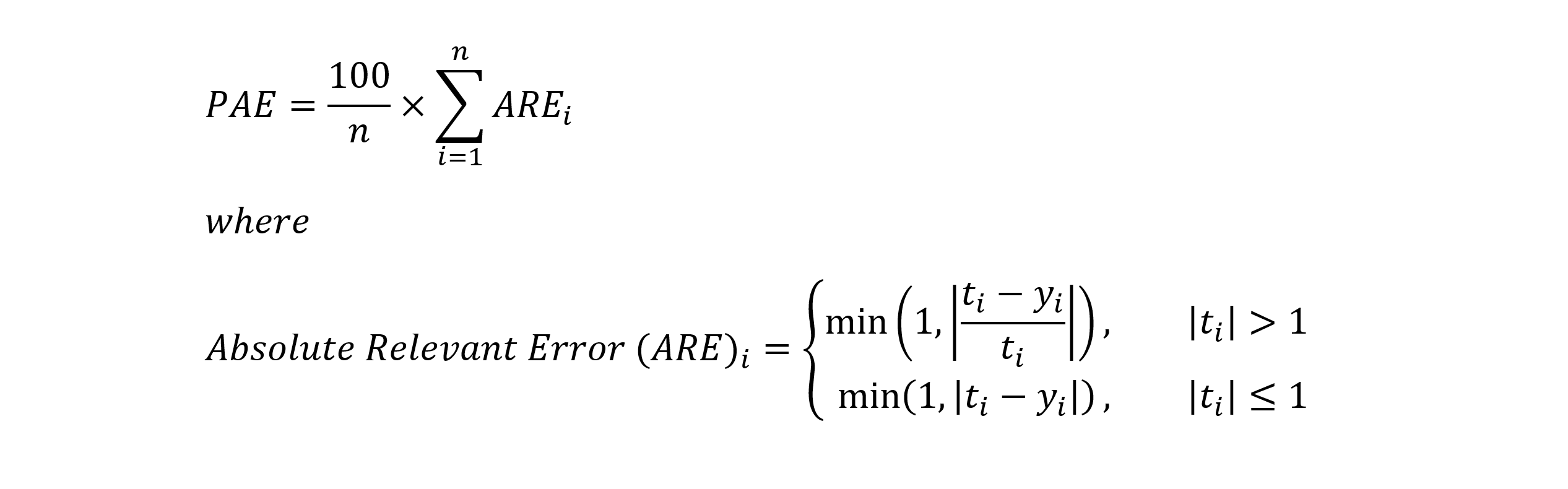

Definition: Mean Absolute Percentage Error (MAPE) is a commonly used metric for evaluating the accuracy of forecasts or predictions; it measures the average absolute percentage difference between the predicted and actual values. Building on MAPE, we define the %Prediction Absolute Error (PAE) as follows:

PAE expresses prediction errors as a percentage of the actual values, making it easier to interpret and compare across different datasets or forecasts. A lower PAE indicates better accuracy, with a PAE equal to zero indicating a perfect prediction, where the predicted values exactly match the actual values.
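
For orientation, the standard MAPE that PAE builds on can be sketched as below. This is the textbook MAPE only, not the PAE formula itself; the function name and data are hypothetical, and the guard against zero actual values is an added assumption.

```python
def mape(y_true, y_pred):
    """Mean absolute percentage error, returned in percent."""
    if any(t == 0 for t in y_true):
        # Percentage error divides by the actual value, so zeros are not allowed
        raise ValueError("MAPE is undefined when an actual value is zero")
    total = sum(abs((t - p) / t) for t, p in zip(y_true, y_pred))
    return 100.0 * total / len(y_true)

# Illustrative data (hypothetical): relative errors are 10%, 10%, and 0%
y_true = [100.0, 200.0, 50.0]
y_pred = [110.0, 180.0, 50.0]

print(mape(y_true, y_pred))  # roughly 6.67 (percent)
print(mape(y_true, y_true))  # 0.0 for a perfect prediction
```

Dividing by the actual value is what makes the result comparable across datasets with different scales, and it is also why the metric breaks down when actual values are at or near zero.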

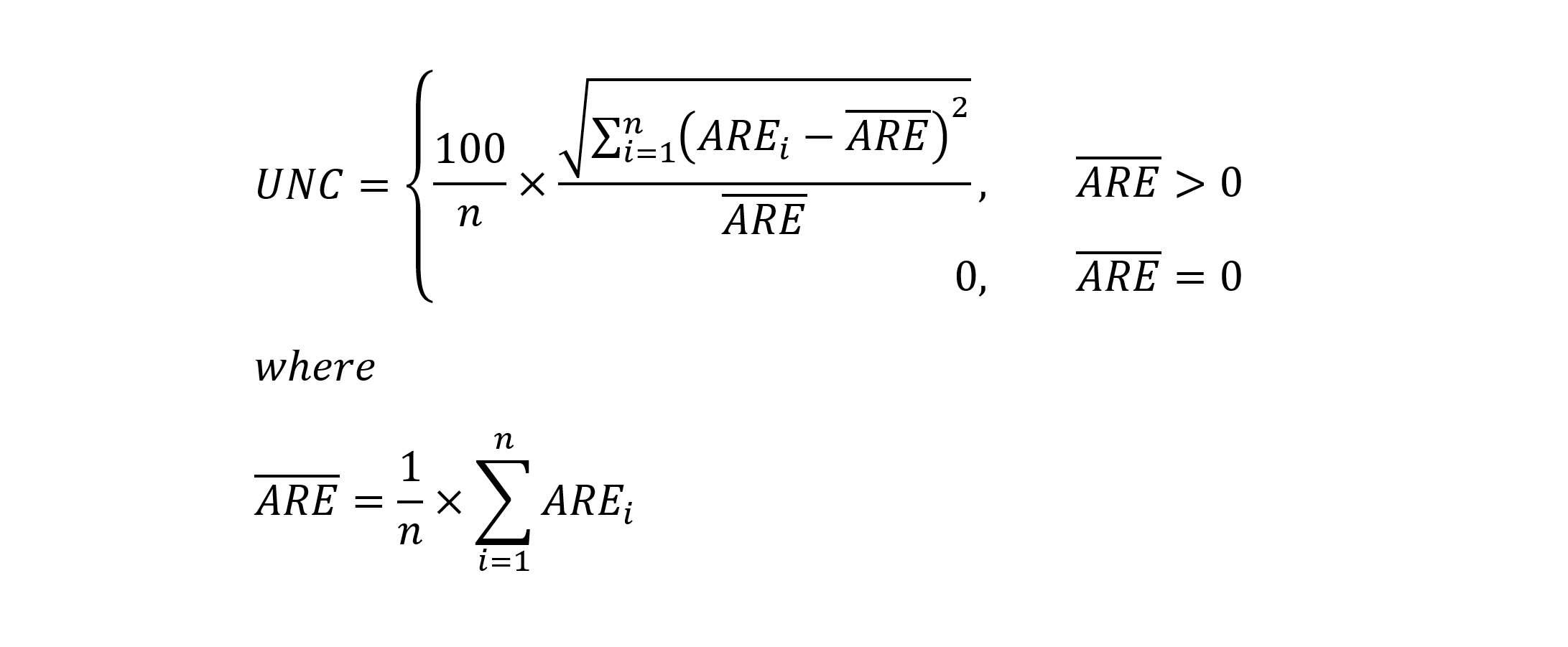

Definition: The %Uncertainty (UNC) is the relative uncertainty of the mean Absolute Relative Error (ARE). It indicates how much the forecast (or prediction) errors vary relative to the overall average error, and is defined as follows:

A higher UNC suggests that the forecasted (or prediction) errors are more dispersed around their mean, indicating greater variability or uncertainty in the forecasts (or predictions). Conversely, a lower UNC implies that the errors are closer to the mean, indicating less variability or uncertainty.

Definition: The total number of incorrectly classified examples.

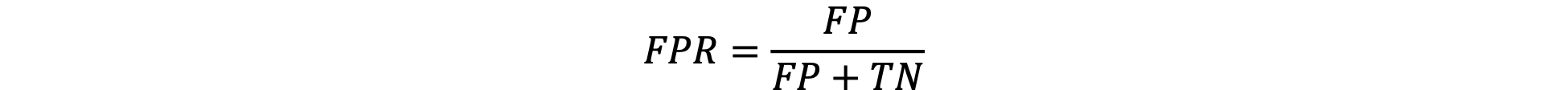

Definition: The ratio of false positives to the total number of actual negatives.

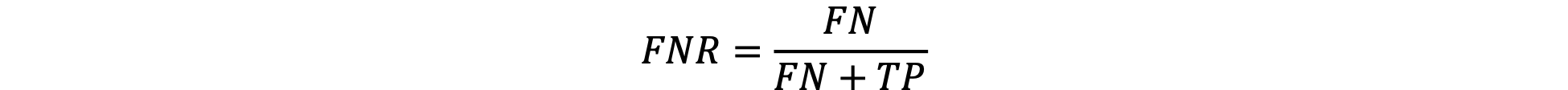

Definition: The ratio of false negatives to the total number of actual positives.

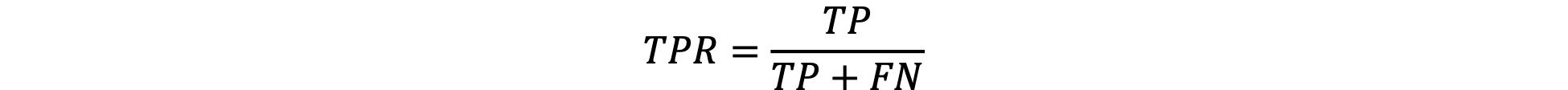

Definition: The ratio of true positives to the total number of actual positives (also known as recall or sensitivity).

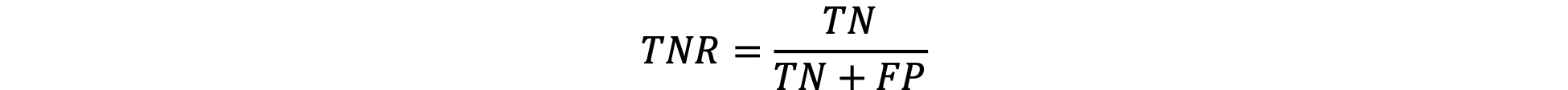

Definition: The ratio of true negatives to the total number of actual negatives (also known as specificity).
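
The misclassification count and the four rates above all derive from the same confusion-matrix counts. The sketch below assumes binary labels with `1` as the positive class; the helper names `confusion_counts` and `rates` are illustrative, not part of any library.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (TP, FP, TN, FN) for binary labels."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        else:
            if t == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn

def rates(tp, fp, tn, fn):
    """FPR, FNR, TPR, and TNR from confusion-matrix counts."""
    return {
        "FPR": fp / (fp + tn),  # false positives over actual negatives
        "FNR": fn / (fn + tp),  # false negatives over actual positives
        "TPR": tp / (tp + fn),  # true positives over actual positives
        "TNR": tn / (tn + fp),  # true negatives over actual negatives
    }

# Illustrative data (hypothetical): 4 actual positives, 6 actual negatives
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
misclassified = fp + fn  # total number of incorrectly classified examples
result = rates(tp, fp, tn, fn)
print(tp, fp, tn, fn, misclassified)  # 3 2 4 1 3
print(result)
```

Note that FPR and TNR sum to 1, as do FNR and TPR, since each pair splits the same denominator. The sketch does not guard against a class being absent, in which case the corresponding denominator would be zero.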

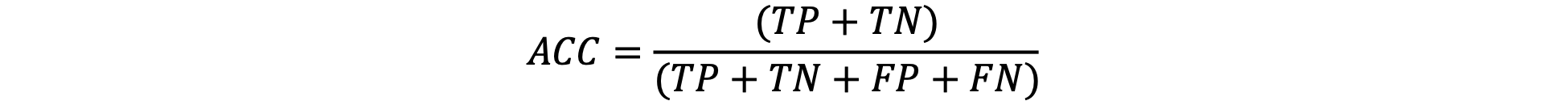

Definition: The ratio of correctly classified instances to the total instances.

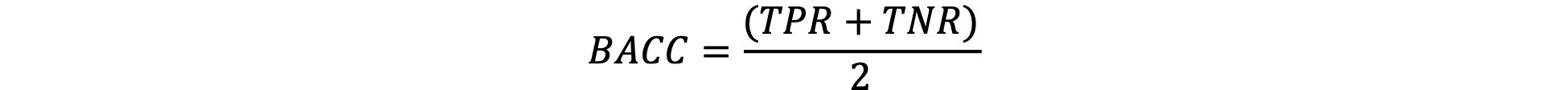

Definition: Balanced accuracy accounts for imbalanced datasets by averaging TPR and TNR.
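
A small sketch can show why balanced accuracy matters on skewed data. It assumes the confusion-matrix counts are already available; the function names and the counts are hypothetical.

```python
def accuracy(tp, fp, tn, fn):
    """Correctly classified instances over all instances."""
    return (tp + tn) / (tp + fp + tn + fn)

def balanced_accuracy(tp, fp, tn, fn):
    """Average of TPR and TNR."""
    tpr = tp / (tp + fn)
    tnr = tn / (tn + fp)
    return (tpr + tnr) / 2

# Illustrative imbalanced case (hypothetical): 10 positives, 90 negatives,
# and a model that finds only 1 of the 10 positives.
tp, fp, tn, fn = 1, 0, 90, 9

acc = accuracy(tp, fp, tn, fn)
bal = balanced_accuracy(tp, fp, tn, fn)
print(acc)  # roughly 0.91
print(bal)  # roughly 0.55
```

Plain accuracy looks strong here because negatives dominate, while balanced accuracy exposes that the model misses nine out of ten positives.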

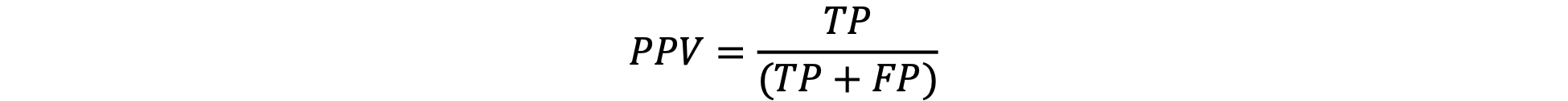

Definition: The ratio of true positives to the total predicted positives.

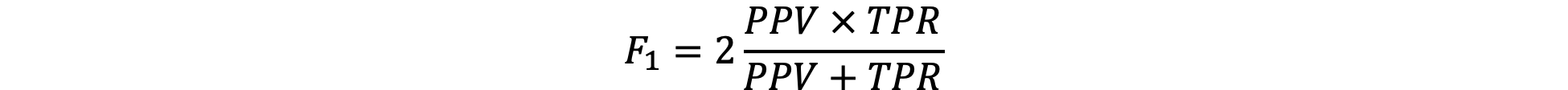

Definition: Harmonic mean of precision and recall, useful for imbalanced datasets.

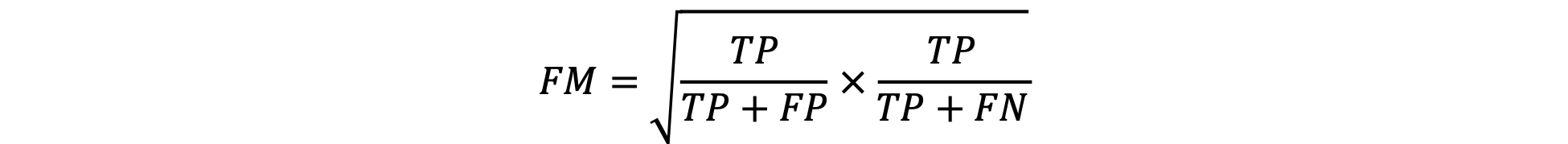

Definition: The geometric mean of precision and recall, which accounts for both false positives and false negatives.

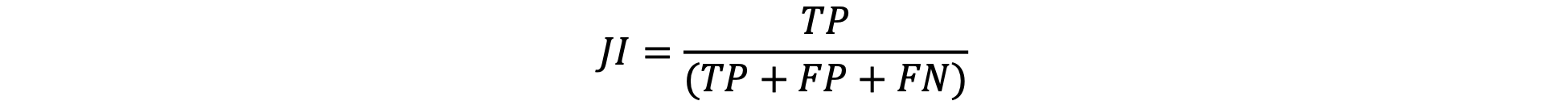

Definition: The ratio of the intersection to the union of predicted and actual positives.
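
The last four definitions depend only on true positives, false positives, and false negatives. The sketch below uses the standard formulas under that assumption; the function names and counts are illustrative.

```python
import math

def precision(tp, fp):
    """True positives over all predicted positives."""
    return tp / (tp + fp)

def recall(tp, fn):
    """True positives over all actual positives (same as TPR)."""
    return tp / (tp + fn)

def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall."""
    p, r = precision(tp, fp), recall(tp, fn)
    return 2 * p * r / (p + r)

def geometric_mean_pr(tp, fp, fn):
    """Geometric mean of precision and recall."""
    return math.sqrt(precision(tp, fp) * recall(tp, fn))

def iou(tp, fp, fn):
    """Intersection over union of predicted and actual positives."""
    # Intersection = TP; union = TP + FP + FN
    return tp / (tp + fp + fn)

# Illustrative counts (hypothetical)
tp, fp, fn = 3, 2, 1

print(precision(tp, fp))              # 0.6
print(f1_score(tp, fp, fn))           # roughly 0.667
print(geometric_mean_pr(tp, fp, fn))  # sqrt(0.45), roughly 0.671
print(iou(tp, fp, fn))                # 0.5
```

None of these four metrics uses true negatives, which is why they remain informative when negatives vastly outnumber positives. The sketch does not handle the degenerate case where a denominator is zero.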