These functions introduce complexity and non-linearity to the network, enabling it to learn intricate patterns and relationships within the data. Activation functions are a fundamental component of the forward pass in neural networks, influencing the network’s ability to capture and represent complex mappings.

The choice of activation function significantly affects the performance and training dynamics of a neural network. Each activation function has its own characteristics, which influence how reliably the network converges during training and how well it captures diverse patterns in the data. Selecting the right activation function is therefore a critical decision that can determine how effectively the network solves a given task.
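Gradient scaling is one concrete way this choice affects training. During backpropagation, the chain rule multiplies the gradient by the activation's derivative once per layer. The sigmoid's derivative never exceeds 0.25, while tanh's derivative reaches 1 at the origin. The Python sketch below is a deliberately simplified illustration, not a model of any particular network. It ignores weights and evaluates every layer at x = 0, which is the best case for both functions. The helper names are hypothetical.

```python
import math


def sigmoid_grad(x: float) -> float:
    """Derivative of the logistic sigmoid: s(x) * (1 - s(x))."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


def best_case_chain(grad_fn, depth: int) -> float:
    """Product of activation derivatives across `depth` layers, each evaluated
    at x = 0 (where both derivatives peak). Weights are ignored, so this shows
    only the activation's own contribution to the gradient scale."""
    product = 1.0
    for _ in range(depth):
        product *= grad_fn(0.0)
    return product


for depth in (1, 5, 10, 20):
    print(f"depth={depth:2d}  sigmoid={best_case_chain(sigmoid_grad, depth):.3e}  "
          f"tanh={best_case_chain(tanh_grad, depth):.3e}")
```

Even in this best case, ten stacked sigmoids scale the gradient by 0.25^10 ≈ 9.5 × 10^-7. This is the vanishing-gradient effect. In real networks the weights also enter the product, and they can instead make gradients explode.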

The Xdeep Core© serves as the powerhouse of the Xdeep AI platform, adeptly managing the vanishing and exploding gradients that can arise across various activation functions and neural network architectures. It also identifies and implements the neural network architecture that delivers optimal performance.

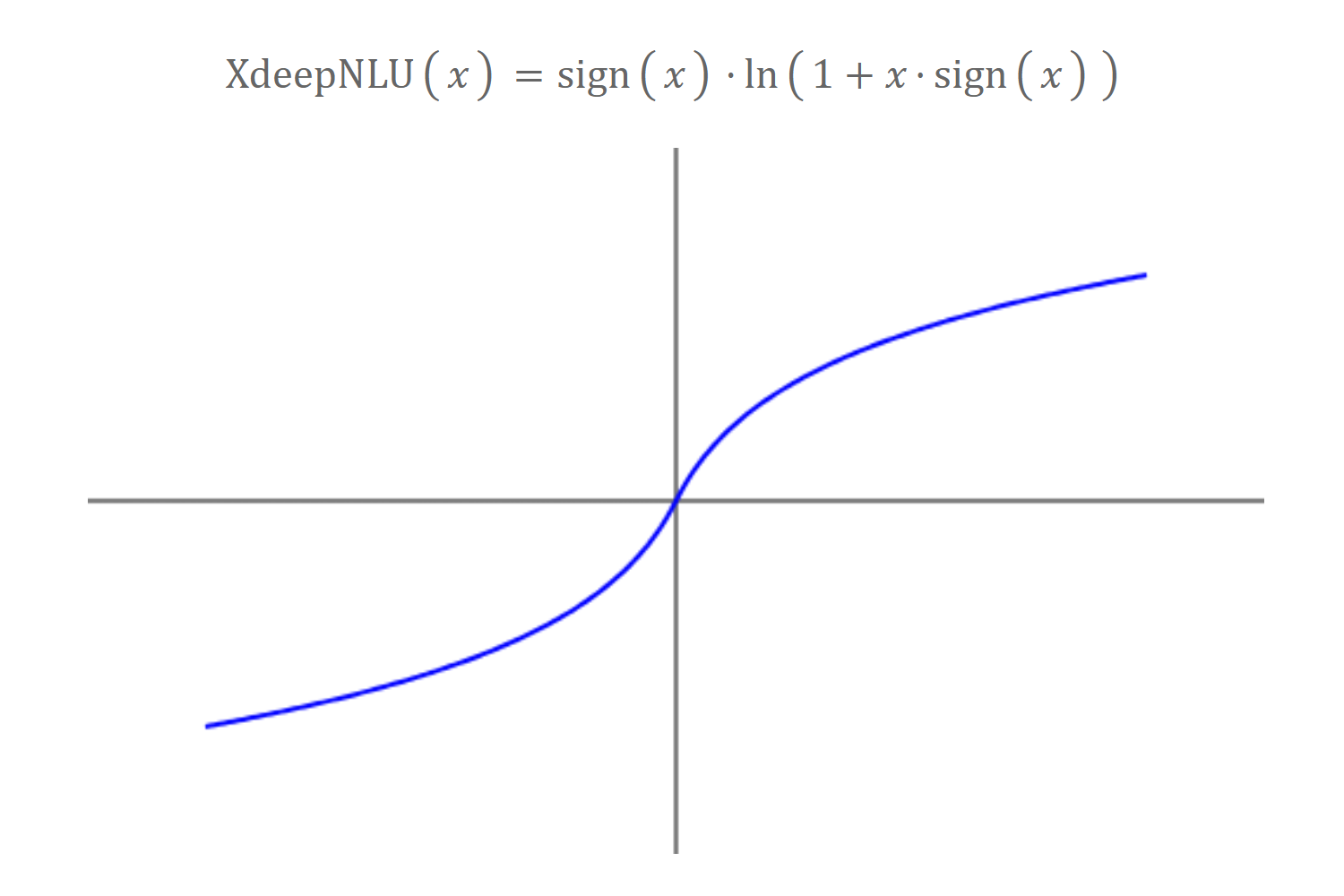

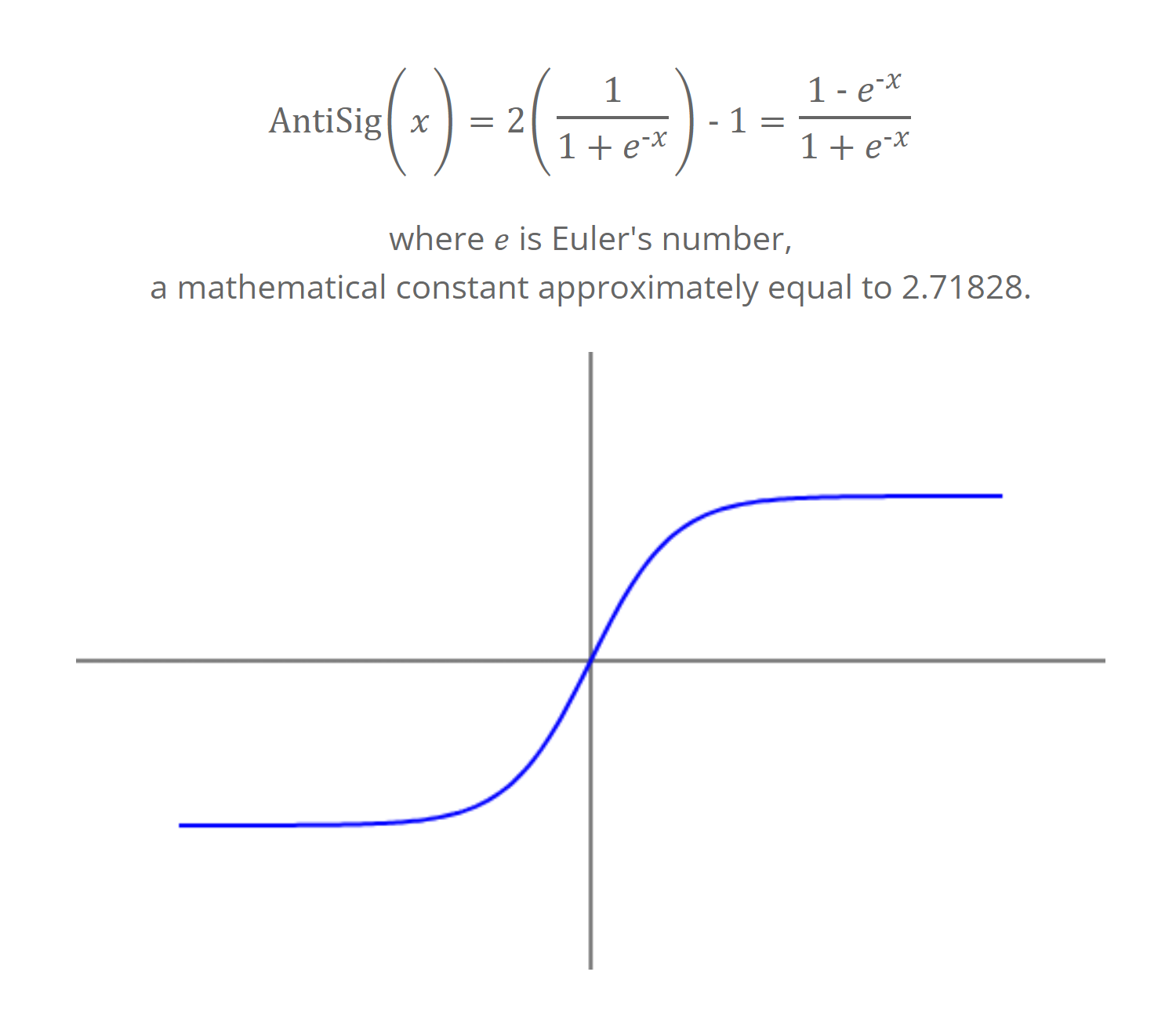

The Xdeep non-Linear Unit (Xdeep NLU) uses an antisymmetric unbounded function to introduce non-linearity, providing a unique transformation for neural networks. It is defined as:

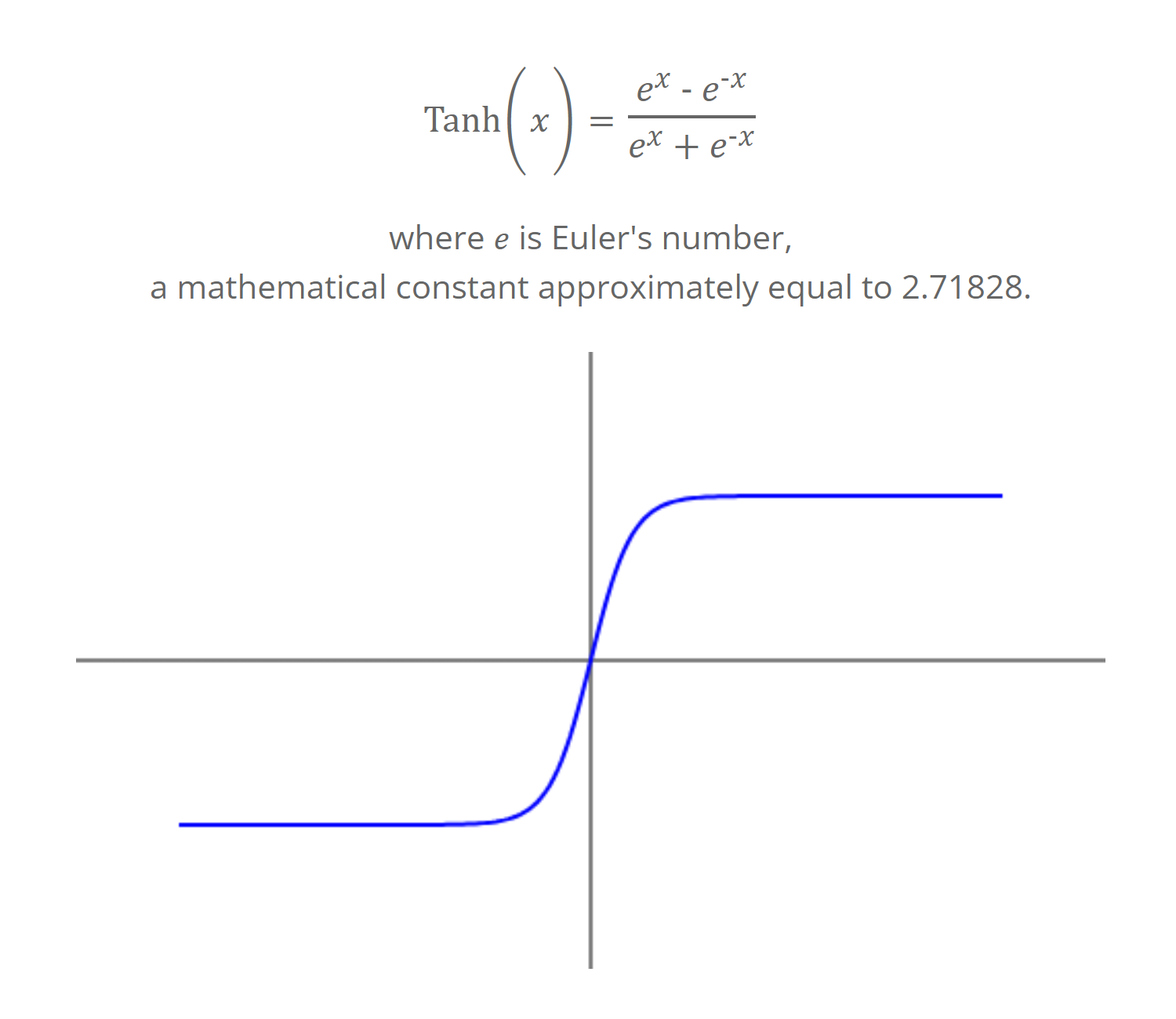

The hyperbolic tangent function, denoted tanh, maps real numbers to the range (-1, 1). It is defined as:

$$
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}
$$

The hyperbolic tangent was a popular activation function in neural networks long before rectified linear units (ReLU) came into widespread use. ReLU has gained prominence in recent years, but tanh remains relevant and continues to be used in various architectures. One advantage of tanh is that its output is zero-centered. Positive inputs map to positive outputs and negative inputs map to negative outputs, so the activations passed to the next layer are balanced around zero. This helps the network model both positive and negative values effectively. It also tends to make gradient-based training better behaved than with strictly positive activations such as the sigmoid.
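The following minimal Python sketch computes tanh from its exponential definition using only the standard library. Evaluating e^x directly overflows for large inputs, so the code uses an algebraically equivalent form. For non-negative x it evaluates (1 - e^(-2x)) / (1 + e^(-2x)), and it handles negative x through antisymmetry. The function name `tanh_stable` is hypothetical.

```python
import math


def tanh_stable(x: float) -> float:
    """tanh(x) = (e^x - e^-x) / (e^x + e^-x), rewritten to avoid overflow.

    For x >= 0, dividing numerator and denominator by e^x gives
    (1 - e^(-2x)) / (1 + e^(-2x)). With m = expm1(-2x) = e^(-2x) - 1,
    this equals -m / (2 + m), which stays accurate even for tiny x.
    Negative inputs use antisymmetry: tanh(-x) = -tanh(x).
    """
    if x < 0:
        return -tanh_stable(-x)
    m = math.expm1(-2.0 * x)
    return -m / (2.0 + m)


# Inputs placed symmetrically around zero give outputs centred on zero.
inputs = [-3.0, -1.0, -0.5, 0.0, 0.5, 1.0, 3.0]
outputs = [tanh_stable(x) for x in inputs]
print([round(y, 4) for y in outputs])
print("mean output:", sum(outputs) / len(outputs))
```

In practice, prefer `math.tanh` or a framework's built-in tanh. The hand-written version only makes the definition and the zero-centered output explicit.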

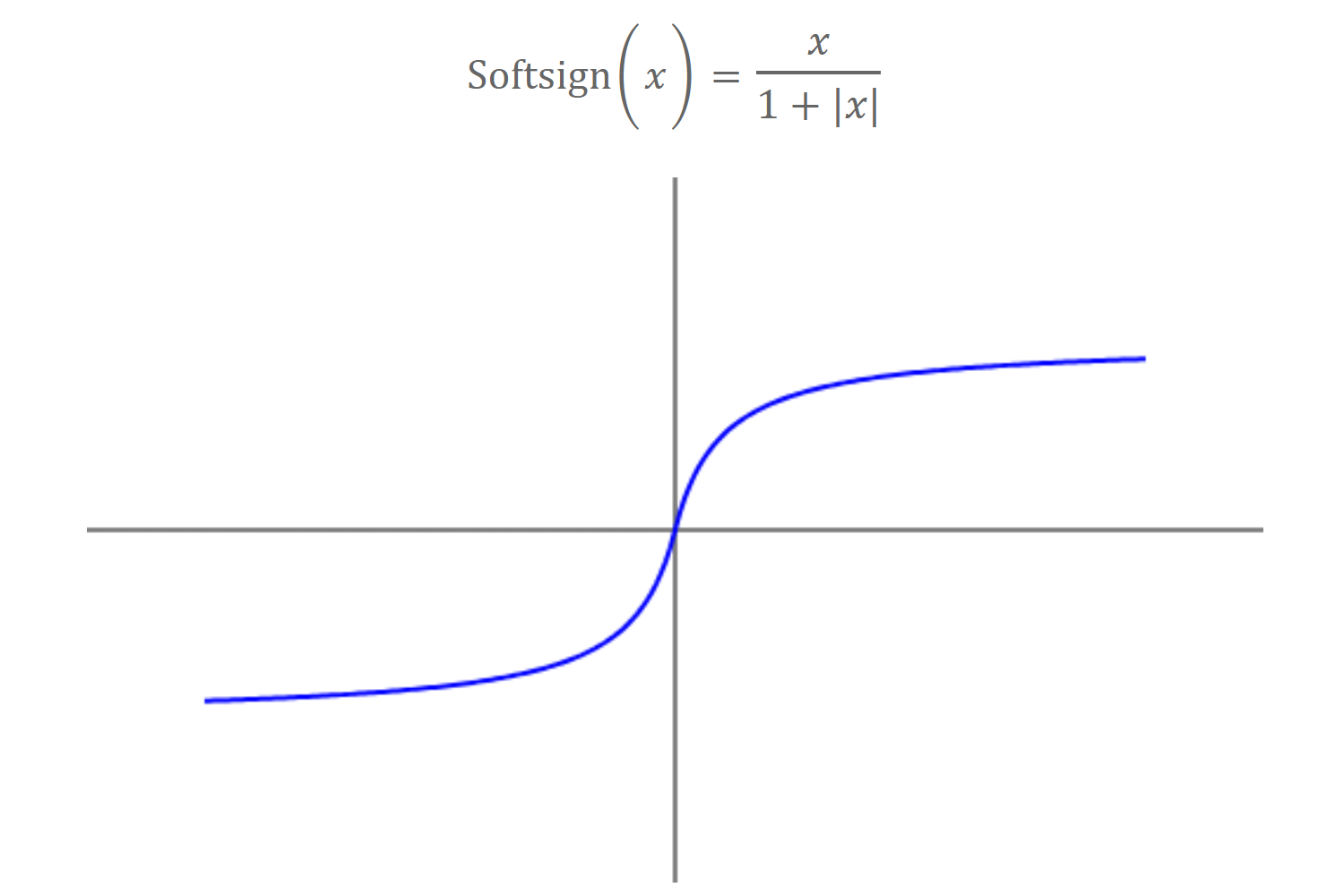

Softsign is an activation function used in neural networks. It is a smooth, non-linear function that maps input values to the range (-1, 1). It is defined as:

$$
f(x) = \frac{x}{1 + |x|}
$$

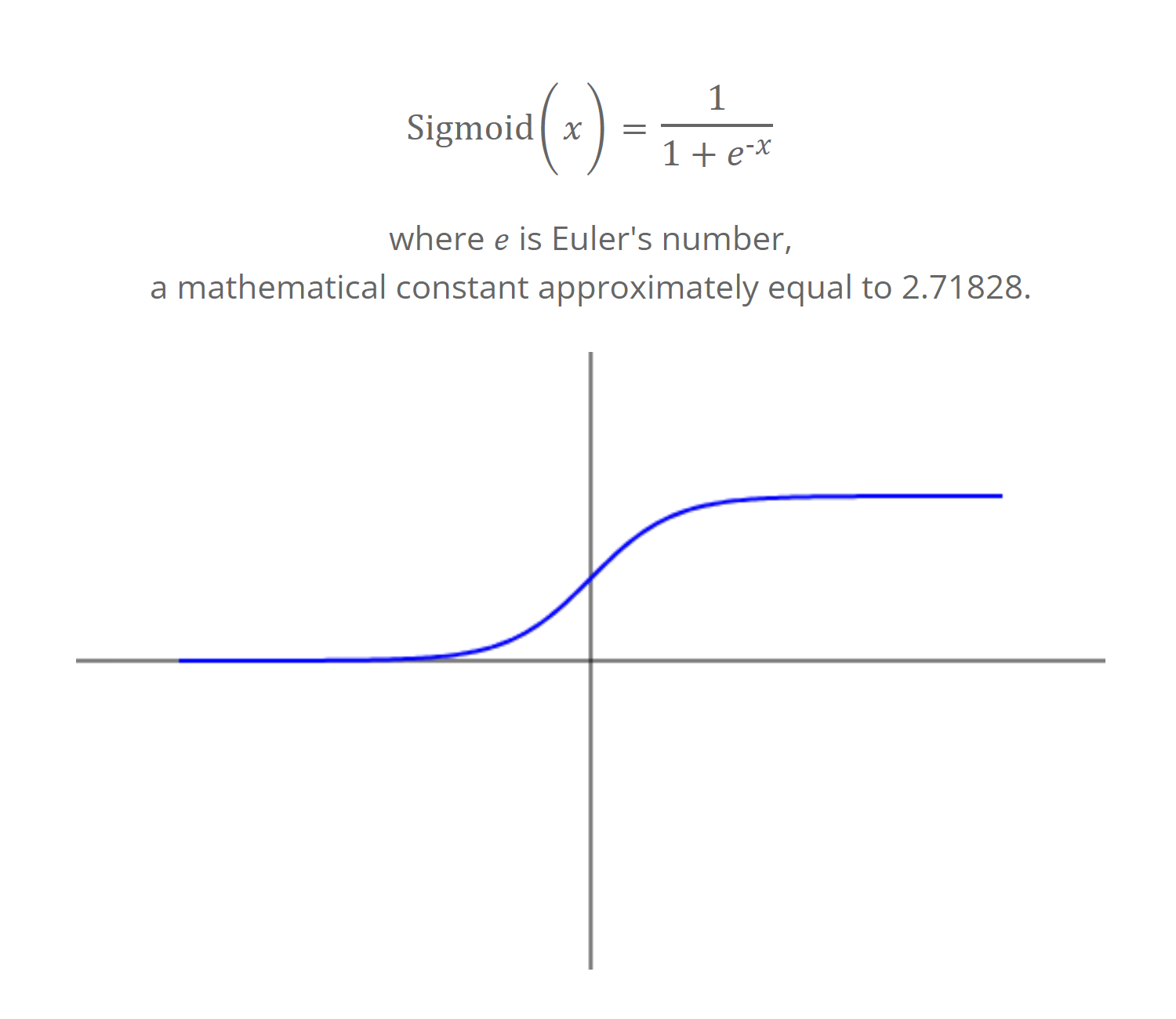
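The sketch below implements softsign, x / (1 + |x|), in plain Python along with its derivative, 1 / (1 + |x|)^2. It then compares how quickly softsign and tanh approach their upper asymptote of 1. The function names are illustrative and not taken from any particular library.

```python
import math


def softsign(x: float) -> float:
    """Softsign: x / (1 + |x|), with outputs in (-1, 1)."""
    return x / (1.0 + abs(x))


def softsign_grad(x: float) -> float:
    """Derivative of softsign: 1 / (1 + |x|)^2."""
    return 1.0 / (1.0 + abs(x)) ** 2


# Distance from the asymptote at 1 for softsign versus tanh.
for x in (1.0, 2.0, 5.0, 10.0):
    print(f"x={x:4.1f}  1-softsign={1 - softsign(x):.2e}  1-tanh={1 - math.tanh(x):.2e}")
```

Softsign's distance to the asymptote shrinks like 1 / (1 + |x|), whereas tanh's shrinks exponentially. Softsign therefore saturates more gradually and retains larger gradients for large-magnitude inputs.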

The sigmoid function, often referred to as the logistic function, is a widely used activation function in neural networks. It has an S-shaped curve, and its output lies in the range (0, 1). It is defined as:

$$
\sigma(x) = \frac{1}{1 + e^{-x}}
$$
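A naive implementation of 1 / (1 + e^-x) raises an `OverflowError` in Python for large negative inputs, because `math.exp(-x)` overflows. The minimal standard-library sketch below switches to the equivalent form e^x / (1 + e^x) for negative x. It also computes the derivative σ(x)(1 - σ(x)). The helper names are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), computed without overflow.

    For very negative x, e^-x would overflow, so that branch uses the
    equivalent form e^x / (1 + e^x) instead.
    """
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """Derivative s(x) * (1 - s(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)


print(sigmoid(0.0), sigmoid(4.0), sigmoid(-1000.0))
```

The derivative peaks at 0.25 when x = 0 and approaches zero in both tails. As a result, saturated sigmoid units pass back very little gradient.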

Multiplying the output of the sigmoid function by 2 and then subtracting 1 gives a transformed version of the sigmoid that is antisymmetric about the origin, with outputs in the range (-1, 1). This transformation can be useful where an antisymmetric activation function is desired, though it is less commonly used than tanh. The two are in fact closely related, because the transformed sigmoid equals tanh(x/2), a horizontally stretched tanh. Mathematically, with σ denoting the sigmoid function, this transformation is represented as:

$$
f(x) = 2\,\sigma(x) - 1 = \frac{1 - e^{-x}}{1 + e^{-x}} = \tanh\left(\frac{x}{2}\right)
$$

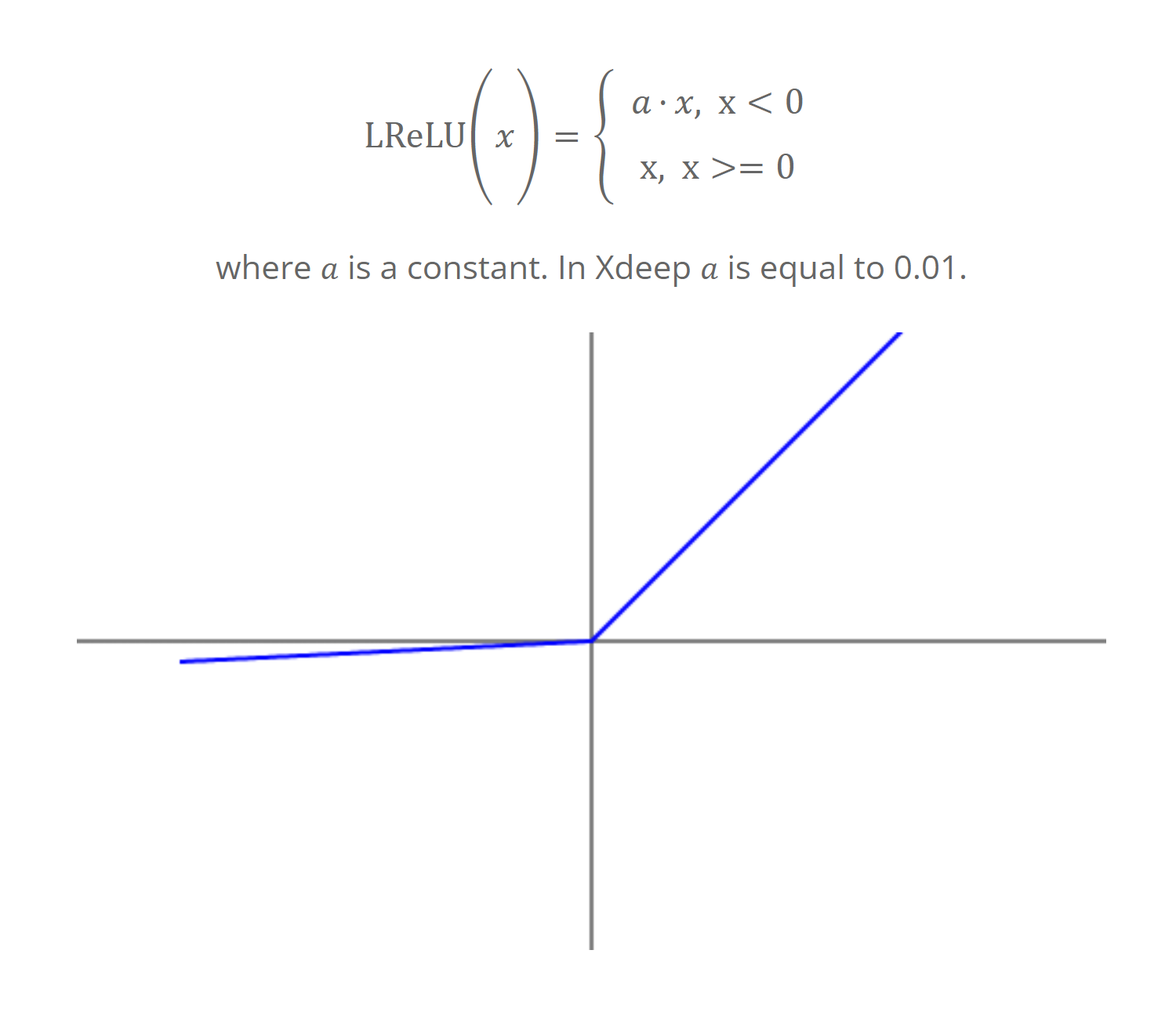
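The identity 2σ(x) - 1 = tanh(x/2) can be checked numerically. In this sketch, `bipolar_sigmoid` is a hypothetical name for the transformed sigmoid. The sigmoid helper uses overflow-safe branching so that large negative inputs do not raise an error.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^-x), overflow-safe for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def bipolar_sigmoid(x: float) -> float:
    """Sigmoid rescaled to (-1, 1): 2 * sigmoid(x) - 1."""
    return 2.0 * sigmoid(x) - 1.0


# 2 * sigmoid(x) - 1 should match tanh(x / 2) up to rounding error.
for x in (-4.0, -1.0, 0.0, 0.5, 3.0):
    print(f"x={x:5.1f}  2*sigmoid(x)-1={bipolar_sigmoid(x):+.6f}  tanh(x/2)={math.tanh(x / 2):+.6f}")
```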

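The Leaky Rectified Linear Unit (Leaky ReLU) generalizes the traditional rectified linear unit by giving negative inputs a small, non-zero slope α instead of mapping them to zero. It is defined as:

$$
f(x) = \begin{cases} x, & x > 0 \\ \alpha x, & x \le 0 \end{cases}
$$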
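The following is a minimal Python sketch of Leaky ReLU and its derivative. The text does not specify the negative slope, so the default `alpha = 0.01` is an assumption, chosen because it is a common value in practice. The function names are illustrative.

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: x for x > 0, alpha * x otherwise.

    alpha is the slope for negative inputs; 0.01 is an assumed default,
    not a value taken from the text.
    """
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Derivative: 1 for x > 0, alpha for x < 0.

    The function is not differentiable at x = 0; this sketch returns alpha
    there by convention.
    """
    return 1.0 if x > 0 else alpha


print([leaky_relu(x) for x in (-10.0, -1.0, 0.0, 2.5)])
```

Setting `alpha = 0` recovers the standard ReLU, which is the sense in which Leaky ReLU generalizes it. Because of the non-zero slope, negative inputs still receive a gradient of `alpha` rather than exactly zero.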

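The Exponential Linear Unit (ELU) is a function that, unlike ReLU, can output negative values. For large negative inputs, ELUs saturate to a negative value (-α) and thereby ensure a noise-robust deactivation state. It is defined as:

$$
f(x) = \begin{cases} x, & x > 0 \\ \alpha\,(e^{x} - 1), & x \le 0 \end{cases}
$$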
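The following is a minimal Python sketch of ELU. The text does not give the scale α, so `alpha = 1.0` is assumed here as a common default. `math.expm1` computes e^x - 1 accurately near zero. The loop shows outputs approaching -α for large negative inputs.

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """Exponential Linear Unit: x for x > 0, alpha * (e^x - 1) for x <= 0.

    alpha = 1.0 is an assumed default, not a value taken from the text.
    math.expm1(x) evaluates e^x - 1 without cancellation error near zero.
    """
    return x if x > 0 else alpha * math.expm1(x)


# Negative inputs saturate towards -alpha instead of being clipped to zero.
for x in (-20.0, -5.0, -1.0, 0.0, 1.0, 3.0):
    print(f"x={x:6.1f}  elu={elu(x):+.6f}")
```

With α = 1, the left and right derivatives at zero both equal 1, so the function is differentiable at the origin. This is not the case for ReLU or Leaky ReLU.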